01 October 2017

Stulz’s CyberRack heat exchanger is designed to replace the back panel of a rack.

The amount of electricity consumed by the global IT sector has increased by six per cent (totalling 21 per cent) since 2012, according to a Greenpeace report published earlier this year (Clicking Clean: Who is winning the race to build a green internet?). And with Cisco’s latest Visual Networking Index forecasting a three-fold increase in worldwide IP traffic over the next few years to reach an annual run rate of 3.3ZB by 2021, are the challenges for maintaining energy efficiency in data centres set to get worse?

“Yes. Dependence on today’s data centres and communications networks has never been more critical,” says Stu Redshaw, CTO of data centre thermal risk specialist EkkoSense. “And with factors such as cloud, Big Data, mobile and the rise of IoT-powered services quickly becoming the new normal, there are going to be entirely new levels of demand placed on corporate data centres. If we don’t start addressing thermal optimisation and energy efficiency now, it’s only going to become a bigger and bigger problem.”

Simon Brady, optimisation programme manager, EMEA for Vertiv (formerly Emerson Network Power), says that while the industry is making considerable progress on efficiency, the issue is managing efficiency through growth.

“There is a lot of empty physical capacity in data centres across the UK and Europe. This space, in theory, looks like compelling square footage for data centres to expand into to meet the ongoing growth in cloud and edge computing. However, the problem with this approach is that much of this space was built five to ten years ago, when facilities needed higher levels of availability and lower density levels than we do now. In short, the infrastructure in these facilities is no longer suitable for future growth.”

Commenting on the Greenpeace report, The Green Grid says many organisations have sought individual goals rather than working together to share best practice and find the best ways to a sustainable future. Roel Castelein, the industry body’s EMEA marketing chair, says: “The growth in the amount of data demands that all data centre providers come together, rather than working in silos, and be clear in their use of renewable energy in creating a more sustainable industry.”

Jon Pettitt, VP of data centre cooling solutions EMEA and APAC at climate control innovator Munters, admits that with data centre builds multiplying at a rapid rate to keep up with digital demand, the amount of energy they need for cooling is huge and increasing, both in terms of cost and in environmental impact. But he points out that with the use of innovative energy-efficient products and solutions, new-build data centres are optimised to run most efficiently.

John Booth agrees. As well as chairing the Data Centre Alliance’s (DCA) energy efficiency steering committee, he is also vice chair of the British Computer Society’s Green IT specialist group and represents the organisation on the British Standards Institute IST/46 Sustainability. Booth is also the executive director of Sustainability for London and the technical director of the National Data Centre Academy, so he clearly knows a thing or two about the subject in hand.

Booth believes any company that is not building an energy efficient data centre nowadays really needs to get out of the business because it is not taking advantage of the newer technologies and concepts that have been available since 2008.

He adds that whilst the data centre sector is growing at a CAGR of 18 per cent, this is largely driven by the adoption of cloud services, mobile networks, IoT and self-driving cars. Booth says these facilities will be inherently more efficient by design and operations. It is legacy data centres that form the bulk of the data centre estate globally, and these are the ones that therefore need to be addressed.

“The best way is by going back to school. The key to it all is education, and there are many training companies that provide courses on how to optimise data centres for energy efficiency. Essentially, a radical approach is required, both culturally and strategically.”

According to Booth, the EU Code of Conduct for Data Centres (Energy Efficiency) offers a “great” starting point: “The scheme has 153 energy efficiency best practices ranging from physical air flow to increasing temperatures. Further information and help is available from the DCA, and their energy efficiency and sustainability steering committee is connected to thought leaders and standards committees globally. Solutions include better design concepts, new equipment, and strategic/cultural change thought processes.”

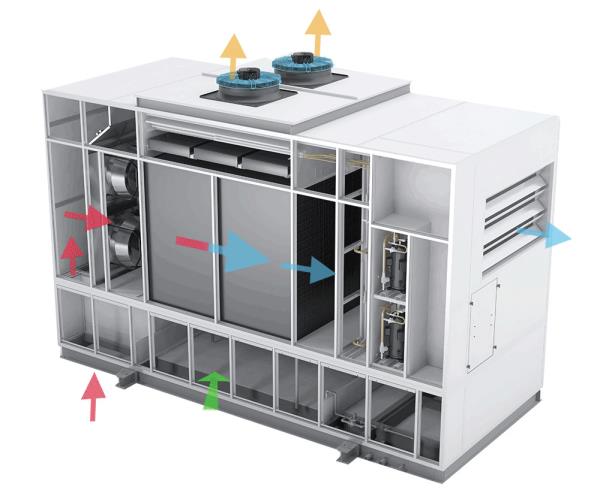

Munters claims its Oasis indirect evaporative cooling solutions can save up to 75 per cent in energy consumption compared to standard AC systems.

To PUE or not to PUE?

What metrics should be used to measure and monitor power efficiency in data centres? For example, is the PUE (power usage effectiveness) rating oft-cited by operators enough?

“While there are many issues with PUE – particularly when it comes to comparing performance across multiple data centre sites – what matters most is that data centres actually do measure and monitor their power and energy efficiency,” says Redshaw. “Concerns about specific measurement regimes can often lead to not measuring at all. Actual PUE measurements can no doubt deliver real benefits; they’re simple and the results are likely to be positive (providing data centre operators commit and remain committed to improving their PUE scores).”

Booth points out that PUE is an improvement metric for an individual data centre and should not be used for comparison as all facilities will have different design and operational parameters. “The other KPI metrics contained within the ISO 30134 series can assist. The real KPI of interest is one that is developed in-house and relates to your IT estate infrastructure and your business needs, for instance, establishing your core services against business need and improving the cost of delivery.”

Developed by The Green Grid and introduced in 2007, PUE describes the ratio of total amount of energy used by a data centre to the energy delivered to computing equipment. It was published in 2016 as a global standard under ISO/IEC 30134-2:2016 as well as a European standard under EN 50600-4-2:2016.
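As a rough illustration of the ratio described above, the calculation might be sketched as follows. The function name and the annual energy figures are hypothetical and chosen only to make the arithmetic easy to follow; the ISO/IEC standard itself sets out where and how the two energy values should be measured.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Return PUE: total facility energy divided by IT equipment energy.

    Both values must cover the same period (for example, one year).
    """
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical annual figures, for illustration only.
annual_total_kwh = 1_800_000.0
annual_it_kwh = 1_000_000.0

print(f"PUE = {pue(annual_total_kwh, annual_it_kwh):.2f}")
```

A value of 1.0 would mean every unit of energy reaches the computing equipment; anything above that is overhead such as cooling and power distribution losses.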

Vertiv’s Brady reckons PUE is often poorly calculated. “Although the ISO standard has outlined how PUE should be measured, many readings are being skewed by bad calculations or missing information – such as lighting or office heating being excluded. Before looking at alternative ways of measuring efficiencies, an accurate and consistent measurement of PUE is paramount for it to be of any use in the future.”
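Brady's point about missing information can be made concrete with a small sketch. The load categories and kWh figures below are invented for illustration, and the helper `pue_from_loads` is hypothetical; the aim is only to show how leaving out items such as lighting or office heating flatters the result.

```python
# Hypothetical annual energy use by category, in kWh.
loads_kwh = {
    "it_equipment": 1_000_000.0,
    "cooling": 550_000.0,
    "power_distribution_losses": 150_000.0,
    "lighting": 40_000.0,
    "office_heating": 60_000.0,
}


def pue_from_loads(loads: dict, excluded: tuple = ()) -> float:
    """Compute PUE from a category breakdown, optionally omitting some loads."""
    total = sum(kwh for name, kwh in loads.items() if name not in excluded)
    return total / loads["it_equipment"]


full_pue = pue_from_loads(loads_kwh)
skewed_pue = pue_from_loads(loads_kwh, excluded=("lighting", "office_heating"))

print(f"All loads counted:            {full_pue:.2f}")
print(f"Lighting and heating omitted: {skewed_pue:.2f}")
```

With these assumed figures the same facility reports 1.80 or 1.70 depending on what is counted, which is why a consistent measurement boundary matters before any comparison over time.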

However, in an ideal world, Brady believes the industry would have more advanced metrics than PUE to measure efficiency. He says these should represent how much compute can be achieved against input power levels. “Temperature readings or flops per second could also be great insights into the efficiency of a data centre.”

In July this year, The Green Grid also said IT leaders must use a wide range of metrics to drive data centre efficiency. It said that the latest annual Data Centre Industry Survey from the Uptime Institute revealed that, in many cases, IT infrastructure teams are still relying on the least meaningful metrics to drive efficiency.

“The majority of IT departments are positioning total data centre power consumption and total data centre power usage as primary indications of efficient stewardship of environmental and corporate resources,” stated The Green Grid.

In its own survey of 150 IT decision makers, The Green Grid found that while most recognise that a broad range of KPIs are useful in monitoring and improving their data centre efficiency, many are yet to implement them.

For instance, while 82 per cent said they viewed PUE as a valuable metric, only 29 per cent used it; only 59 per cent used DCiE (data centre infrastructure efficiency) despite 80 per cent regarding it as useful; and 71 per cent said temperature monitoring was useful but only 16 per cent took advantage of it.
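For readers unfamiliar with DCiE, it is the reciprocal of PUE expressed as a percentage, so the two metrics carry the same information. A minimal sketch of the conversion, with hypothetical function names, might look like this:

```python
def dcie_percent_from_pue(pue: float) -> float:
    """Convert PUE to DCiE (per cent). DCiE = 100 / PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 100.0 / pue


def pue_from_dcie_percent(dcie_percent: float) -> float:
    """Convert DCiE (per cent) back to PUE."""
    if not 0.0 < dcie_percent <= 100.0:
        raise ValueError("DCiE must be in the range (0, 100]")
    return 100.0 / dcie_percent


for example_pue in (2.0, 1.6, 1.25):
    print(f"PUE {example_pue:.2f} -> DCiE {dcie_percent_from_pue(example_pue):.1f}%")
```

A facility with a PUE of 2.0 therefore has a DCiE of 50 per cent: half of the energy it draws reaches the IT equipment.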

Castelein says the reason for limited adoption may come down to the perception that implementation will have a negative impact on capex and opex. “This doesn’t have to be the case. With enough resourcefulness and data centre knowhow, you don’t necessarily have to be a big spender to increase your data centre efficiency and therefore save money and do less harm to the environment. Oversimplifying or even focusing on a single metric can create wider business issues as key factors are ignored.”

He continues by saying data centre providers need to harness an array of metrics in order to gain a holistic view of their facilities and to drive environmental stewardship.

“Poor measurement is just as bad as no measurement at all. Therefore, IT leaders need to expand from a single-metric view and include broader technical metrics and KPIs into a meaningful message.”

Booth supports this view when he says: “The trick is to take a holistic view as the implementation of a new technology will rarely improve things unless the intangible policies, processes and procedures are adopted together with the new technology.”

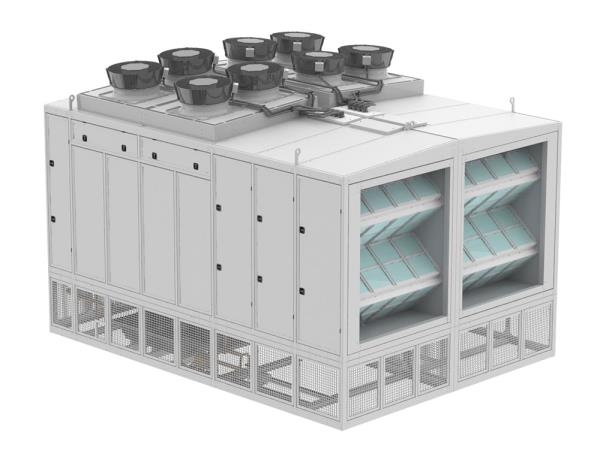

Schneider Electric says indirect air economisation systems like its new Ecoflair can be deployed regardless of most environmental or climatic conditions.

Power monitoring

With so many products purporting to have ‘green’ credentials, how should data centre managers go about choosing the right equipment for energy efficiency and what are the pitfalls to avoid?

For new environments, Booth says the EUCOC and EN50600 series of data centre design, build and operate standards are “must haves”, and that there are plenty of “very good data centre design and building companies working on the bleeding edge” that can help. He adds: “Legacy environments can prove difficult as dangers exist in adopting the EUCOC best practices. But again, the use of experts in the field will prove worthwhile. The EUCOC provides a roadmap of what to do and when.”

EkkoSense’s Redshaw says that getting hold of an accurate, up-to-date picture of what’s actually going on in the data centre has always proved difficult. However, when a data room is carefully mapped with all the appropriate data fields – power capacity, space capacity and cooling capacity – he reckons a new level of understanding and efficiency becomes possible.

“So you need to take advantage of today’s cost-effective IoT-enabled sensors, you need to be using the latest 3D thermal visualisation and monitoring software, and you also need proven expertise to interpret this data and optimise thermal performance. Only then can you really start to unlock benefits in terms of reducing data centre risk, securing significant energy savings and increasing your levels of cooling capacity.”

One seemingly simple solution to help with power monitoring is the intelliAmp from Marlborough-based equipment specialist, Jacarta.

“It obviously makes sense to have a detailed understanding of data centre power usage in order to make energy savings where possible in the future,” says Jacarta marketing director Colin Mocock. “The problem is that the implementation of an effective power monitoring solution usually requires extensive disruption to the network and considerable downtime.”

The intelliAmp is a small sensor that can be clipped onto 16 and 32 Amp rack input power cables to monitor the current flowing through them without the need to shut off servers or any other equipment. Once the device is installed, Jacarta says the power usage of each rack can be analysed and informed decisions can be taken to help manage power more efficiently going forward.

Mocock says intelliAmp can be installed in live environments and its small size and design means it can operate unobtrusively in the data centre environment. “It gives IT managers the ability to get meaningful power data right now rather than wait until there’s a major overhaul of their data centre,” he claims.

intelliAmp is supplied with a central monitoring system that can monitor several hundred sensors from a single IP address. Environmental sensors (temperature, humidity, etc.) can also be added if necessary.
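To show how a per-rack current reading can be turned into a power estimate, here is a minimal sketch. It is not Jacarta's method: the 230V single-phase supply, the 0.95 power factor, the rack names and the current readings are all assumptions made for illustration.

```python
def estimate_rack_power_kw(
    current_amps: float,
    voltage_volts: float = 230.0,  # assumed single-phase supply voltage
    power_factor: float = 0.95,    # assumed; real values vary with the load
) -> float:
    """Estimate real power (kW) drawn by a rack from a measured current."""
    if current_amps < 0:
        raise ValueError("current cannot be negative")
    if not 0.0 < power_factor <= 1.0:
        raise ValueError("power factor must be in the range (0, 1]")
    return voltage_volts * current_amps * power_factor / 1000.0


# Hypothetical clamp readings in amps, keyed by rack name.
readings_amps = {"rack-01": 9.5, "rack-02": 14.2, "rack-03": 3.1}

for rack, amps in sorted(readings_amps.items()):
    print(f"{rack}: {amps:.1f} A, approx. {estimate_rack_power_kw(amps):.2f} kW")
```

Even an estimate of this kind is enough to rank racks by consumption and spot those running close to the limit of a 16 or 32 Amp feed.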

Playing it cool

Castelein describes cooling as a key “chokepoint” in data centre efficiency, and says this is an area where significant cost savings and sustainability progress can be made.

Redshaw adds to this by saying it’s clear that the root cause of poor thermal performance in data centres is not one of limited cooling capacity but rather the poor management of airflow and cooling strategies. “The fact that thermal issues still account for almost a third of unplanned data centre outages would suggest that the 35 per cent of energy consumption that operators are currently spending on cooling simply isn’t doing the job it needs to,” he says.

According to Munters, 30 to 40 per cent of energy consumption in a typical data centre is attributed to cooling. It says thermal loads inside data centres and electronic enclosures must therefore be managed efficiently using solutions that minimise energy consumption.

“Using indirect evaporative cooling (IEC) is one approach that has the potential to address this issue where air quality also matters,” says Pettitt. “Munters’ Oasis range of IEC solutions can save up to 75 per cent in energy consumption compared to standard air-conditioning systems – the equivalent to the emission of 30,000 cars per data centre.”

He goes on to claim that data centres are also able to free up electrical power for their core business and increase available power by 37 per cent when using this approach. This also enables operators to install more communication equipment without needing to invest in additional expensive power installations from utility companies.

Pettitt says the choice of direct or indirect air economisation for a data centre depends on their benefits, geographic location, capex, opex and availability risks. “The main barrier to using direct fresh air is the concern of air pollution and high humidity risks on server longevity, which is the biggest difference compared to indirect air economiser systems. So if you are in a high pollutant area, such as a city or near to an airport or crop farming location, you are most likely to choose a solution that does not allow dust and contaminants into the data hall.”

Schneider Electric says indirect air economisation can be deployed regardless of most environmental or climatic conditions relating to the data centre’s location. It says the technology is typically suitable for at least 80 per cent of all global locations.

Launched in March, Schneider’s Ecoflair Indirect Air Economizer uses proprietary polymer heat exchanger technology and features a tubular design that, according to the firm, prevents the fouling that commonly happens with plate-style heat exchangers. Furthermore, it reckons the polymer is corrosion-proof, unlike designs that use coated aluminium, which corrodes when wet or exposed to the outdoor elements.

Available in 250kW and 500kW modules, the heat exchanger is said to be particularly suited for colo facilities rated between 1 and 5MW (250kW modules), and large hyperscale or cloud data centres rated up to 40MW (500kW modules).

Schneider reckons the Ecoflair Indirect Air Economizers can reduce cooling operating costs by 60 per cent compared to legacy systems based on chilled water or refrigerant technologies. But other specialist vendors may not support this approach.

For example, late last year Vertiv unveiled a new thermal management solution that integrates rack and cooling technologies into a single unit. Designed with two different architectures – closed loop and hybrid loop – the firm says the Liebert DCL puts chilled water cooling capabilities very close to the heat source. It reckons this optimises the amount of air needed for cooling which increases energy efficiency and cost savings.

Nicola Domenighini, technical sales manager with mission critical cooling solutions provider Stulz UK, says that if mechanical cooling is replaced with solutions such as free cooling options, opex savings of up to 65 per cent can be achieved. “For example, 15 years ago, using the energy efficiency ratio (EER, the ratio of cooling capacity to power consumption), the value for a typical air-cooled chiller was around 2.5-2.7, which is nowadays 3.2-3.7 due to new technologies and/or design concepts.”
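The effect of the EER improvement Domenighini describes can be worked through with a short sketch. The 500kW cooling load is a hypothetical figure, the EER values are the midpoints of the two ranges quoted, and EER is treated as a dimensionless kW-per-kW ratio.

```python
def chiller_input_kw(cooling_load_kw: float, eer: float) -> float:
    """Electrical input (kW) needed to deliver a cooling load at a given EER."""
    if eer <= 0:
        raise ValueError("EER must be positive")
    return cooling_load_kw / eer


cooling_load_kw = 500.0       # hypothetical heat load to be removed
old_eer = (2.5 + 2.7) / 2     # midpoint of the range quoted for 15 years ago
new_eer = (3.2 + 3.7) / 2     # midpoint of the range quoted for today

old_kw = chiller_input_kw(cooling_load_kw, old_eer)
new_kw = chiller_input_kw(cooling_load_kw, new_eer)
saving = 1.0 - new_kw / old_kw

print(f"Older chiller input: {old_kw:.0f} kW")
print(f"Newer chiller input: {new_kw:.0f} kW")
print(f"Reduction in chiller power: {saving:.0%}")
```

Under these assumptions the newer chiller needs roughly a quarter less electrical power for the same cooling duty, before any free cooling is taken into account.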

Stulz is also using chilled water for its latest heat exchanger, the CyberRack Active Rear Door which is designed to replace the back panel of a rack. By mounting the heat exchanger door directly on the rack, the firm says heat transfer takes place inside the server cabinet, isolated from the ambient environment. It reckons this allows a “considerably” higher temperature on the air and chilled water side than in other room cooling systems, where the heat is first emitted into the room and then cooled.

Two versions of the CyberRack are available with a cooling capacity of 19 or 32 kW. They include up to five EC fans to ensure an optimum airflow. Stulz says cooling capacity is automatically adapted to the heat load of the servers, either directly through continuous analysis of the measured temperatures, or indirectly via differential pressure control. In the latter instance, the speed of the fans is adjusted in line with the airflow of the servers’ own fans.

Thanks to its individual adapter frame, Stulz says the CyberRack can be installed in all commonly available 19-inch cabinets. Frame models are available in heights of 42U and 48U, and widths of 600mm and 800mm. The company adds that space in the rack remains fully available for IT equipment, and that no repositioning of server racks is needed.

According to Rittal, expanding a small-scale, air-cooled IT hardware environment to create a multi-enclosure facility often calls for a new cooling strategy. “The first and most fundamental question is whether water-based or refrigerant-based cooling is more appropriate,” states the firm. “It also makes sense to understand the total cost of ownership – including both capital expenditure and ongoing operating costs.”

As a result, Rittal has come up with two systems to deliver enclosure-based cooling via direct expansion (DX) units. It says the units are easy to install simply by mounting them on the side panels inside IT racks.

The firm believes DX solutions for cooling IT equipment are the quickest and easiest solutions to implement and require less capital expenditure than water-based ones. It explains that such solutions utilise conventional refrigerant-based cooling with a split system and a compressor. Cooling is via a closed-loop refrigeration cycle, featuring an evaporator, compressor, condenser and expansion valve.

Rittal’s LCU DX (Liquid Cooling Unit) offers enclosure-based cooling with DX units mounted inside 800mm wide racks. They are available with up to 6.5kW output in both single and dual redundancy variants. The system features horizontal air circulation, supporting the conventional method of front-to-back air flow to the 19-inch racks. Cold air is blown directly in front of the components. After being warmed by the servers, the air is drawn into the cooling unit at the rear of the enclosure and passes through the heat exchanger, which cools it down.

Rittal points out that this method requires IT enclosures that are sufficiently airtight (such as its TS IT series); otherwise cold air will escape, impacting overall efficiency.

The second system is the LCP DX (Liquid Cooling Package). Suitable for 12kW power dissipation, it can be mounted on the side of an IT enclosure, enabling a single device to cool two enclosures. Rittal says one version of LCP DX blows cool air out to the front and can be employed to create solutions with a cold aisle that cools multiple IT racks.

More sense needed

Going forward, Castelein says the need for data centre providers and end users to collaborate to ensure that our growing dependency on technology and use of data is sustainable has never been greater.

EkkoSense’s Redshaw also sounds a cautionary note when he says that for operators to optimise their thermal performance, it’s necessary to have access to much more granular levels of data – and that effectively requires data centres to actively monitor and report temperature and cooling loads on an individual rack-by-rack basis. “Currently, less than five per cent of UK data centre teams gather this quality of data, so it’s clear that the majority of organisations still have a long way to go if they’re to successfully capture the kind of data needed for even more precise visualisations as well as the ability to audit their data centre thermal performance in real-time.”

But he continues by saying that a dramatic shift in the cost of IoT-enabled telemetry means that it’s now possible for organisations to equip their data centres with the number of rack-level sensors required to measure key factors such as energy consumption, heat and airflows.

“You can also do this for around the same budget that you’re currently spending on basic telemetry. And with a fully-sensed data centre, the sensors actually end up costing less than 20 per cent of the expense of a single – often unnecessary – cooling machine.

“When it comes to thermal optimisation, the cost dynamics have shifted dramatically, and it’s time for organisations to take advantage of the optimisation benefits this can bring.”

According to Vertiv’s Brady, while the industry has been trying to squeeze the most out of physical infrastructure – such as power and cooling – the actual IT load is “woefully inefficient”.

“Standard servers can consume up to 80 per cent of their power whilst doing nothing. As an industry, we need to start designing facilities that meet the likely – or realistic – load. A data centre that is built to handle a significantly higher load than it will ever need will forever run in a largely inefficient way. Data centres need to be designed with purpose, with fluid technology that can flex to rising and falling capacity demands.”
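Brady's figure for idle servers can be illustrated with a simple model. The sketch below assumes a linear relationship between utilisation and power draw, a 400W peak and an idle draw of 80 per cent of peak; these are simplifying assumptions for illustration, not measurements of any particular server.

```python
def server_power_w(
    utilisation: float,
    peak_w: float = 400.0,       # assumed peak draw of one server
    idle_fraction: float = 0.8,  # idle draw as a share of peak, per the quote
) -> float:
    """Estimate server power draw (W) with a simple linear model."""
    if not 0.0 <= utilisation <= 1.0:
        raise ValueError("utilisation must be between 0 and 1")
    idle_w = peak_w * idle_fraction
    return idle_w + (peak_w - idle_w) * utilisation


for utilisation in (0.1, 0.5, 1.0):
    watts = server_power_w(utilisation)
    # Power per unit of useful work, relative to a fully loaded server.
    relative_cost = (watts / utilisation) / server_power_w(1.0)
    print(f"{utilisation:.0%} utilised: {watts:.0f} W, "
          f"{relative_cost:.1f}x the energy per unit of work")
```

In this model a server running at 10 per cent utilisation uses about eight times as much energy per unit of work as one running flat out, which is the inefficiency that right-sizing is meant to avoid.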
