30 May 2014

One of Colt’s data centres is now being run using an orchestration suite that was developed in-house to allow staff and clients to speed up service provision and avoid SDN vendor lock-in.

Yes. But that probably requires some explanation to be truly convincing, says IAN GRANT.

In the mid 1980s, ICL gave a presentation about how it saw the development of the data centre. The leading UK IT firm said that the future centre would run “lights out” – i.e. without any staff except for one man and a dog: the dog was there to stop the man from touching anything, and the man was there to feed the dog.

At the time, ICL had no idea how the scale and scope of what was happening in the data centre would change: Google, Amazon, Microsoft Azure, Rackspace and the rest didn’t exist; Cisco and Juniper were just getting started; network owners rented fixed lines and dial-up modems; and mobile data connectivity was just a dream.

Thirty years on, networks have become too complex to run and too expensive to own, but they are essential to almost every aspect of modern life. So users are doing to networks what they’ve done to computing and data storage: virtualising them. As John Donovan, senior executive VP of technology and network operations at AT&T – which buys more communications kit than anyone else in the world – says: “There’s no army that can hold back an economic principle whose time has come.”

Virtualising the network requires a return to the original, simple principles that underpin the design of IP, according to Nick McKeown, professor of electrical engineering and computer science at Stanford University. Giving the annual Appleton lecture at the Institution of Engineering and Technology in May, he said these principles are the capacity to deal elegantly with corrupted, out of order, duplicated and lost packets. “The fact that it was so simple and so dumb was the reason for its success,” said McKeown.

This simplicity led to low barriers to entry, affordable kit that was plug and play, and consequently high rates of innovation. Placing the intelligence at the network edge meant core networks were easy and cheap to upgrade to cope with rising traffic volumes. Decentralised control allowed the network to grow very fast but organically as long as the rest of the network could recognise and deliver the packets.

But as McKeown pointed out, a market now annually worth $300bn that provides 70 per cent gross margins gives vested interests every reason to protect their cut. As a result, the vendors developed unique proprietary enhancements, and network routers and switches became as complex or more so than mainframes. Worse, they are now surrounded by add-ons such as load balancers, firewalls, DNS/DHCP servers, etc, and attached to virtualised compute and storage servers.

Faced with traffic volumes growing 40 or 50 per cent a year, firms like Google and Facebook started designing their own ‘white boxes’. These are switches and routers based on ‘merchant silicon’ – generic microprocessors that do a few things very fast and put the complex functions done by network appliances into software. McKeown said he was researching “primitives”, the smallest functions that enable networking and speed it up even more.

“Switches might have come down in price to $5,000, but firms like Google had plenty of incentive to develop a $1,000 switch, not only for cost, but also for control,” he said. As a result, the network equipment industry is going from being a closed, vertically integrated, proprietary industry to one that is open and horizontal.

SDN defined

For all the hype that surrounds it, all the software defined networking (SDN) initiative has done is to separate the data forwarding from the control mechanisms in routers and switches. As a result, instead of duplicating images of the network in every device, engineers can define all the network intelligence – such as which devices are connected to the network, which network policies are in place, and what to do with a packet when it arrives – in a central controller. All the routers and switches have to do is forward the packets according to the rules sent to them by the controller.
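The split between control and forwarding described above can be sketched in a few lines of Python. This is a toy model rather than OpenFlow or any vendor's controller: the `Rule`, `Switch` and `Controller` names, the switch name `edge-1` and the port numbers are all hypothetical, and rules here match only on destination address prefix.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Rule:
    prefix: str    # destination prefix to match, e.g. "10.0.1.0/24"
    out_port: int  # port on which matching packets are sent out


@dataclass
class Switch:
    """Forwarding element: holds rules but makes no decisions of its own."""
    name: str
    table: list = field(default_factory=list)

    def forward(self, dst_ip):
        """Return the output port for dst_ip, or None if no rule matches."""
        dst = ipaddress.ip_address(dst_ip)
        matches = [r for r in self.table
                   if dst in ipaddress.ip_network(r.prefix)]
        if not matches:
            return None
        # The most specific (longest) matching prefix wins.
        best = max(matches,
                   key=lambda r: ipaddress.ip_network(r.prefix).prefixlen)
        return best.out_port


class Controller:
    """Central controller: the only place where network policy is defined."""

    def __init__(self):
        self.switches = {}
        self.policy = {}

    def register(self, switch):
        self.switches[switch.name] = switch
        self.policy.setdefault(switch.name, [])

    def add_rule(self, switch_name, prefix, out_port):
        self.policy[switch_name].append(Rule(prefix, out_port))

    def push(self):
        """Send each switch a fresh copy of its rules."""
        for name, switch in self.switches.items():
            switch.table = list(self.policy[name])


controller = Controller()
edge = Switch("edge-1")  # hypothetical switch
controller.register(edge)
controller.add_rule("edge-1", "10.0.0.0/8", 1)
controller.add_rule("edge-1", "10.0.1.0/24", 2)
controller.push()
```

Changing the network's behaviour then means editing the policy held by the controller and pushing it again; the switch code itself never changes.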

In its April Magic Quadrant report on data centres, Gartner said: “The differentiation between vendor solutions is now relatively balanced between software (management, provisioning, automation and orchestration) and hardware (bandwidth, capacity and scalability).” Thanks to the past three years of hype, more customers are interested in SDN. “Search volume for SDN on gartner.com is now higher than searches for MPLS, WAN optimisation, application delivery controller and router,” said Gartner. It added that customers hope SDN will allow faster provisioning of workloads in the data centre, improve management and network visibility, improve traffic engineering or capacity optimisation of their networks, cut networking costs, improve performance, and reduce vendor lock-in.

While some (mostly new) firms are building their SDN architectures from the bare metal up, others advocate implementing an overlay network. This typically integrates the provisioning of network and compute resources in a more agile infrastructure. But while this is an important development, Gartner warned that the overlay is still fully dependent on a physical underlay network, and issues of network control and visibility are critical to ensure the reliability of overlay solutions.

Those calling for overlays tend to be the incumbent vendors. Some have acquired the most promising start-ups, either to hedge their bets or to take out rivals. Others, such as Ericsson with Ciena, or NEC with IBM, are forging strategic alliances.

The standards soup

Given that the oldest SDN standards body, the Open Networking Foundation (ONF), is only three years old, it will be some time before there are genuine plug and play solutions across the entire network stack. The network space is also different. Unlike compute and storage, where VMware and EMC were able to grab leading market shares quickly, networking is more complicated politically and technically.

Such is the threat to their futures that incumbent vendors quickly countered the formation of the ONF with their own version, the OpenDaylight movement. There is now some agreement that the ONF will develop the APIs for ‘southbound’ traffic, i.e. between L3 (network) and L2 (data), while OpenDaylight will deal with the ‘northbound’ traffic to the higher application layers (L4-L7). Meanwhile, European standards body ETSI will develop specific tools and protocols to virtualise network functions, and all three will coordinate their standards-making efforts.

Roughly speaking, SDN pertains mainly to networks inside the firewall, while NFV (network functions virtualisation) is concerned mainly with wide area and carrier networks. Parallel to this, and being incorporated in the SDN ecosystem, is the OpenStack cloud computing initiative. But at a technical meeting in May, users complained that OpenStack’s networking component, Neutron, doesn’t work at scale. Smaller implementations appear unaffected, and it is only because more firms are basing their commercial and public cloud ecosystems on OpenStack that problems have occurred.

The software was originally contributed by SDN pioneer Nicira before it was acquired by VMware. According to some, Neutron now works at scale only if Nicira’s NSX plug-in is used.

At the meeting, Red Hat also announced a beta version of its Linux OpenStack platform 5.0, which goes some way to address the Neutron issue with a new L2 plug-in. OpenStack enhancements include a new compute API and an updated OpenStack block storage backup API called Cinder. Red Hat claims the plug-in eases the addition of new L2 networking technologies and continues to support existing plug-ins, including Open vSwitch, an open source virtual switch that is part of the Linux kernel. It also enables single root I/O virtualisation PCI passthrough, enabling traffic to bypass the software switch layer to improve network performance.

“This is important for firms with heterogeneous network environments who want to mix plug-ins for networking systems,” said Red Hat. It has also developed an OpenDaylight driver for the new L2 plug-in that enables communication between Neutron and OpenDaylight to create a solid foundation for coming NFV technologies.

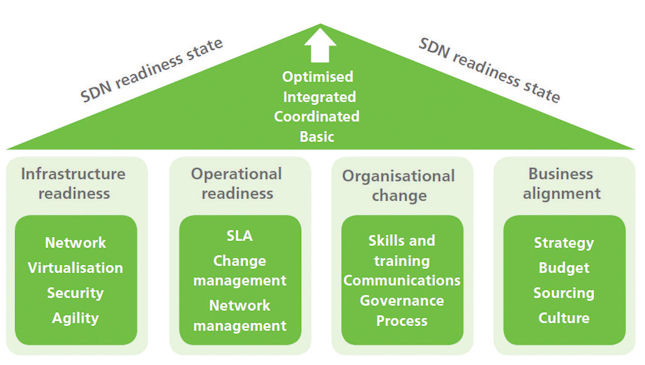

Dimension Data’s new SDN Development Model aims to map how to get clients from their “as-is” network state to their “wannabe” state as quickly and simply as possible with the available resources.

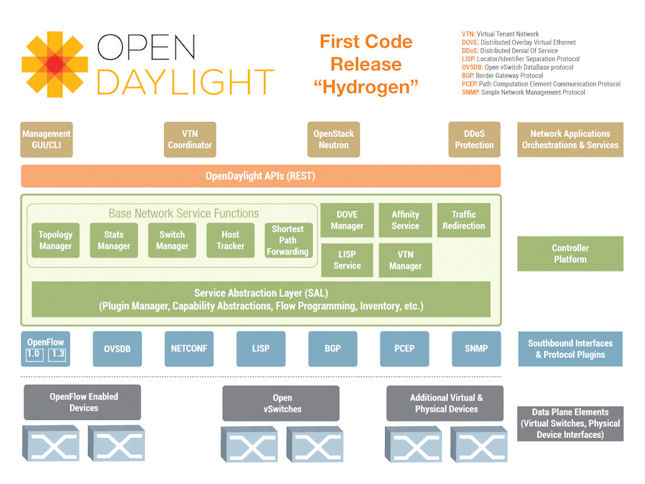

OpenDaylight is providing a standard platform (shown in green) to handle ‘northbound’ network traffic in a virtual network controller.

Why are we waiting?

Standards are coming, but they are slower than expected because everyone involved is stressing that the code that emerges must be open source. ONF is already looking at conformance testing for v1.3 of its OpenFlow protocol for exchanging data between the controller and the network elements. ETSI published four group specifications in October 2013 to cover NFV use cases, requirements, the architectural framework, and terminology. It expects to publish more detailed specifications later this year.

OpenDaylight has published Hydrogen, its code for handling traffic above L3, in three ‘editions’: Base, for researchers and academics; Virtualised, for data centre operators; and Service Provider, for host data centre operators (see the diagram above).

The OpenDaylight controller exposes open northbound APIs which are used by applications. The platform itself contains a collection of dynamically pluggable modules to perform essential network tasks such as understanding what devices are contained within the network and the capabilities of each, statistics gathering, etc. In addition, other extensions can be inserted into the controller platform for extra SDN functionality.

But some aren’t waiting. Colt has built its own ‘orchestration’ system to manage data centre assets, as Fahim Sabir, director of engineering for IT services, explains: “There are a number of orchestration platforms out there from various vendors, but it is not a mature market and there is still a long way to go. For example, the platforms on the market lack robust reporting and analytics capabilities. Orchestration in the data centre is not just about managing virtual resources; physical assets need to be factored in as well.

“Also, most vendors’ orchestration platforms tend to work best in conjunction with their own infrastructure technologies. They provide limited support for the infrastructure technologies from other vendors, especially those that compete in the same technology space. For companies that want to operate in a vendor-neutral environment it requires significant compromise to choose one of these solutions. As a result, Colt decided to build its own platform.”

The firm chose modules based on whether they are orchestration-friendly and expose well-documented, open APIs, so that resources can be dialled up and down according to capacity, performance and availability requirements. Sabir says this is key to an orchestration capability because it means Colt can perform operations without having to redeploy anything, which in turn simplifies the work the platform has to do.

“Our vision is to give internal teams and our customers the same slick experience for deploying infrastructure. The orchestration engine is a cornerstone in that. The platform is in production today and we have set up a team responsible for developing the platform further, as well as building the orchestrations that execute on it.”

Practicalities

Given how immature SDN technology is, and how radical an effect it is going to have on networks in data centres and WANs, a lot of learning needs to happen.

Systems integrator Dimension Data (DD) says it has invested some $30bn in building and managing more than 9,000 private IP networks worldwide, enabling over 13 million users to connect to their organisations’ networks. It has also built nine data centres and is adding two more.

“SDN offers us additional choices when architecting our clients’ networks,” says group executive for networking Rob Lopez. “More importantly, SDN creates opportunities for cost savings through more efficient operations, as well as a more effective delivery of network services.”

Business development manager Gary Middleton adds that DD’s own data centre operation was the prototype for a structured way of assessing the applicability and viability of SDN to an organisation, which the company launched in late May. “Our CIO was our pilot client for the SDN Development Model. He saw some benefits in certain areas, and that’s resulted in a plan where we’re looking at SDN in those areas.”

The competitive position of DD’s hosted cloud is said to be based on its networking capability. It is looking to SDN to sharpen that edge through better, faster service provision, and lower costs from reduced headcount could also be a factor. Middleton notes that DD’s provisioning of virtual LANs and network services is already highly automated. “We’re looking to improve that further by automating things like the provision of MPLS links. It’s all about providing an elastic capability for networking up and down as needed.”

But he reckons the jury is still out on capex savings due to white label switching boxes – Dimension Data’s research into that sector threw up a “lot of names no-one has heard of”, and deeper inspection found issues with their capacity to scale and support their products. But Middleton acknowledges this may be temporary.

In the meantime, it gives firms like Cisco, with whom DD has a long and deep relationship, time to get their SDN act together. Couple that with large businesses’ innate conservatism, and Middleton expects incumbent network equipment suppliers to enjoy a period of grace.

“We think 60 or 70 per cent of our clients are going to wait until their incumbent suppliers get their SDN strategy right before deploying it. At the present time, I don’t think anyone’s SDN offer is compelling enough to persuade clients to change their hardware vendor. They’ll implement brand name switches, but then automate a lot of the functionality on top of it, so the savings will come in the form of operational savings. Instead of having an engineer touch every device to configure a network, all that will be automated.

“We do see a space for software-based SDN like NSX from VMware. That’ll be an important element for a sector of the client base. It will all come down to client choice and whether they buy into the software-defined data centre and the VMware approach, or whether they view hardware as a strong component.”

In the interim

Most SDN solutions will disrupt IT and business operations because they require a complete revamp of network infrastructure and services, according to Alcatel-Lucent Enterprise. It has developed two online demos using its Application Fluent Network (AFN) with standards-based sFlow and OpenFlow initiatives plus InMon for enterprise scale SDN analytics. It claims they present a scalable solution that enables inherent, application intelligent SDN capabilities, keeping costs under control.

One demo deals with competition for network resources between different workloads. Large flows due to VM migrations, storage, backup, and replication can interfere with smaller flows such as web requests, database transactions, and social media actions that are sensitive to delay. Alcatel-Lucent says it shows how these large flows can be identified and controlled so that both types of traffic obtain optimal performance. The other demo shows how a DoS attack can be detected, and mitigation enforced, in a distributed fashion across the network in real-time.
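As a rough illustration of how large flows might be picked out of sampled traffic, the sketch below scales up 1-in-N packet samples (the kind of sampling sFlow performs) to estimate each flow's rate and flags those above a threshold. It is not Alcatel-Lucent's or InMon's method: the function name, the flow labels, the sampling rate and the 100Mbps threshold are all assumptions chosen for the example.

```python
from collections import defaultdict


def find_large_flows(samples, sampling_rate, interval_s, threshold_bps):
    """Estimate per-flow bit rates from sampled packets.

    samples       -- iterable of (flow_key, packet_size_in_bytes)
    sampling_rate -- N, where one packet in every N was sampled
    interval_s    -- length of the measurement window in seconds
    threshold_bps -- flows at or above this estimated rate are 'large'
    """
    est_bytes = defaultdict(int)
    for flow, size in samples:
        # Each sampled packet stands in for roughly N real packets.
        est_bytes[flow] += size * sampling_rate

    rates = {flow: total * 8 / interval_s
             for flow, total in est_bytes.items()}
    return {flow: bps for flow, bps in rates.items() if bps >= threshold_bps}


# Hypothetical one-second window sampled at 1 in 1,000 packets.
samples = [("vm-migration", 1500)] * 50 + [("web-request", 400)] * 2
large = find_large_flows(samples, sampling_rate=1000,
                         interval_s=1.0, threshold_bps=100e6)
```

A controller could then apply a different rule to the flagged flows, for example placing them in a lower-priority queue, while leaving the delay-sensitive small flows untouched.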

Companies that prefer to wait for more clarity to emerge from the SDN fog might care to look at network automation specialists such as Infoblox. It has just refreshed its line of solutions for managing DNS, DHCP and IP addresses, known as DDI, running on BMC, CA, Cisco, ElasticBox, HP, Microsoft and VMware.

The vendor claims that by using DDI, VMs can be provisioned with IP addresses and DNS records in minutes instead of hours or days, with addresses recovered and reused, and DNS records cleaned up automatically when VMs are retired. Marketing EVP David Gee says: “IT workloads are shifting to private clouds and these clouds require automation in the network layer to match the already heavily automated compute and store functions.”
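The lifecycle described here (assign an address and DNS record when a VM is created, reclaim both when it is retired) can be sketched as follows. This is a minimal in-memory model, not Infoblox's product or API: the `AddressManager` class, the host names and the address range are hypothetical.

```python
import ipaddress


class AddressManager:
    """Toy address and DNS manager for a single subnet."""

    def __init__(self, cidr):
        # Usable host addresses in the subnet, in ascending order.
        self.free = [str(h) for h in ipaddress.ip_network(cidr).hosts()]
        self.dns = {}  # hostname -> IP address

    def provision(self, hostname):
        """Allocate an address and create the DNS record in one step."""
        if hostname in self.dns:
            raise ValueError(f"{hostname} already has a record")
        if not self.free:
            raise RuntimeError("address pool exhausted")
        ip = self.free.pop(0)
        self.dns[hostname] = ip
        return ip

    def retire(self, hostname):
        """Remove the DNS record and return the address to the pool."""
        ip = self.dns.pop(hostname)
        self.free.append(ip)
        return ip


ipam = AddressManager("10.0.0.0/30")  # two usable addresses
first = ipam.provision("vm-a")
second = ipam.provision("vm-b")
ipam.retire("vm-a")                    # record removed, address recovered
reused = ipam.provision("vm-c")        # gets the recovered address
```

In practice the same two calls would be triggered by the cloud platform's create and delete events rather than by hand, which is where the time saving comes from.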

Then there’s load balancing. But here, you need to handle traffic and applications differently, says Lori MacVittie, cloud computing and application security expert at F5 Networks. “Application load balancing arose because network load balancing was all based on inbound variables. It couldn’t take into consideration how loaded the chosen server was, or whether its response time was failing, or whether it was at capacity or not. Those variables were all on the server side, and required visibility into the application, not the client.

“It also couldn’t account for the fact that virtual servers were popping up everywhere, with multiple applications served from the same IP address and port, and forced the web server to become a load balancer itself. That was kind of crazy. If a single server couldn’t scale well enough to meet demand, how is putting a single server in front of them going to help the situation?”

As a result, the hashing techniques used to distribute loads changed, with network variables used to balance traffic, and application variables used to balance apps. This allowed architectures to specialise, with requests for images and static content each routed to their respective dedicated servers. It also enabled persistence (sticky sessions) which greatly accelerated the ability to scale out stateful applications in a web format (i.e. to remember their last ‘incarnation’). MacVittie argues that L3 switches can easily support network load balancing but not application balancing because they don’t have access to the application variables and therefore cannot hash them in order to distribute them.
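The difference between the two approaches can be sketched as below. The first function balances on network variables alone by hashing the client's address and port; the second uses application-side information, namely a session identifier for persistence and a per-server load count. This is an illustrative sketch, not F5's implementation: the server names, the `AppBalancer` class and the use of a simple request counter as the measure of load are all assumptions.

```python
import hashlib

SERVERS = ["app-1", "app-2", "app-3"]  # hypothetical back-end pool


def network_pick(src_ip, src_port, servers):
    """Network load balancing: hash inbound variables only.

    The choice takes no account of how busy the chosen server is.
    """
    key = f"{src_ip}:{src_port}".encode()
    digest = hashlib.sha256(key).digest()
    return servers[int.from_bytes(digest[:4], "big") % len(servers)]


class AppBalancer:
    """Application load balancing with sticky sessions."""

    def __init__(self, servers):
        self.load = {s: 0 for s in servers}  # requests sent to each server
        self.sessions = {}                   # session id -> server

    def pick(self, session_id=None):
        if session_id in self.sessions:
            # Persistence: a known session returns to the same server.
            server = self.sessions[session_id]
        else:
            # Otherwise choose the least-loaded server.
            server = min(self.load, key=self.load.get)
            if session_id is not None:
                self.sessions[session_id] = server
        self.load[server] += 1
        return server


balancer = AppBalancer(SERVERS)
```

The first function needs nothing beyond packet headers, which is why an L3 switch can perform it; the second depends on state that only something with visibility into the application can hold.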

“Thus, while SDN principles are certainly applicable, the architecture used to implement SDN for lower order network layer services is not going to be the same one used to implement SDN for higher order network layer services,” she says.

“When evaluating SDN solutions, it’s important to consider how any two SDN network (core and application) architectures complement one another, integrate with one another, and collaborate to enable a complete software-defined network architecture that supports the unique needs of both layers 2-3 and layers 4-7.”
